
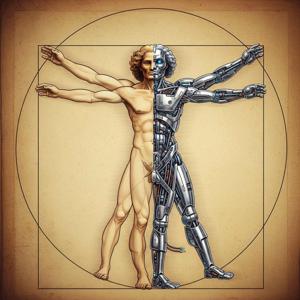

When you first chat with an AI, something feels wonderfully wrong. The responses seem so thoughtful, so surprisingly human, that you almost forget you're talking to a machine. But then that uncomfortable question creeps in: is this thing actually understanding me, or is it just a very clever parrot?

Picture a parrot that's learned to mimic human speech perfectly. It can repeat phrases, even string together sentences that sound meaningful. But we know it's not really understanding—it's just very good at copying patterns. So when an AI gives you advice, cracks a joke, or seems to grasp exactly what you meant, how can you tell the difference?

Picture yourself trying to figure this out. You ask the AI increasingly complex questions, watching for signs of genuine understanding versus mere mimicry. Sometimes its responses surprise you in ways that feel truly creative. Other times, you catch it in mistakes that make you wonder if there's really anyone home at all.

Picture the strange position we're all in now. We're having conversations with something that might be incredibly sophisticated... or might be incredibly simple. The experience feels profound, but the doubt lingers. Are we witnessing the dawn of machine understanding, or are we being fooled by the most convincing mimicry ever created?

This question goes beyond mere curiosity. If we're going to live and work alongside these systems, we need to understand what we're actually dealing with. The answer turns out to be both simpler and more surprising than most people imagine.

What's really happening when you chat with AI? The truth challenges everything we think we know about how smart behavior emerges, and reveals something remarkable about the nature of understanding itself.

Join Ash Stuart as he reveals what's really going on behind those convincingly human responses, and why the parrot comparison might be exactly the wrong way to think about artificial intelligence.

Audio generated by AI


By Ash Stuart
