Sign up to save your podcasts

Or

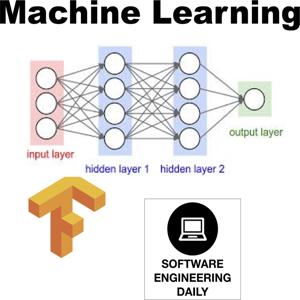

Training a deep learning model involves operations over tensors. A tensor is a multi-dimensional array of numbers. For several years, GPUs have been used for these linear algebra calculations, because graphics chips are built to process matrix operations efficiently.

Tensor processing consists of linear algebra operations that are similar in some ways to graphics processing, but not identical. Deep learning workloads do not run as efficiently on conventional GPUs as they would on specialized chips built specifically for deep learning.

In order to train deep learning models faster, new hardware needs to be designed with tensor processing in mind.

Xin Wang is a data scientist with the artificial intelligence products group at Intel. He joins today’s show to discuss deep learning hardware and Flexpoint, a way to make more efficient use of the space that tensors take up on a chip. Xin presented his work at NIPS, the Neural Information Processing Systems conference, and we talked about what he saw at NIPS that excited him. Full disclosure: Intel, where Xin works, is a sponsor of Software Engineering Daily.
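To give a rough sense of how a number format can shrink the space a tensor takes up, here is a minimal sketch of the general shared-exponent idea: each element is stored as a small integer mantissa, and one exponent is stored once for the whole tensor. This is an illustration under stated assumptions, not Intel's Flexpoint implementation; the function names and the 16-bit mantissa width are hypothetical choices made for the example.

```python
import math


def encode_shared_exponent(values, mantissa_bits=16):
    """Quantize floats to signed integer mantissas plus ONE shared exponent.

    Hypothetical helper for illustration only, not a real library API.
    """
    max_mantissa = 2 ** (mantissa_bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return [0] * len(values), 0
    # Smallest exponent for which the largest value still fits in the mantissa.
    exponent = math.ceil(math.log2(max_abs / max_mantissa))
    scale = 2.0 ** exponent
    # Round to the nearest representable step and clamp against rounding drift.
    mantissas = [
        max(-max_mantissa, min(max_mantissa, round(v / scale))) for v in values
    ]
    return mantissas, exponent


def decode_shared_exponent(mantissas, exponent):
    """Recover approximate floats from the mantissas and the shared exponent."""
    scale = 2.0 ** exponent
    return [m * scale for m in mantissas]


if __name__ == "__main__":
    tensor = [0.5, -0.25, 0.001, 1.0]  # a flattened toy tensor
    mantissas, exponent = encode_shared_exponent(tensor)
    print(mantissas, exponent)
    print(decode_shared_exponent(mantissas, exponent))
```

The trade-off in this sketch is that every element shares the same scale, so values much smaller than the largest element lose precision, while storage per element drops to the mantissa width alone.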

The post Deep Learning Hardware with Xin Wang appeared first on Software Engineering Daily.

By Machine Learning Archives - Software Engineering Daily
