Bad Faith Cycles in Algorithmic Cultivation is an interview-based podcast series that explores identity, [1] defined as who we are and what we do, [2] and agency, defined as the full range of potential actions available to us, or our ability to make a difference, [3] in our contemporary digitally and algorithmically mediated lives.

The datafication of consumers, and the subsequent tailoring of their digital experience, has produced digital echo chambers. An echo chamber is a situation in which an individual is repeatedly exposed to the same perspective on knowledge. [4]

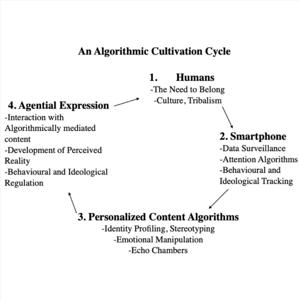

I apply cultivation theory, a media effects model, to the digital echo chambers produced by personalized content algorithms. My aim is to explore how data- and identity-based content targeting may affect an individual’s agency by pre-determining the digital content that influences their framing of reality and their beliefs. This process, Algorithmic Cultivation, raises important considerations about who we are and how we come to be in data-based systems.

Personalized content algorithms operate cyclically to provide users with targeted content. They start with identity factors and then draw on previous browsing habits to predict behaviour and make content suggestions. Every interaction, or lack of interaction, with a piece of suggested content provides feedback to the algorithm, which uses this new information to suggest further content, and the cycle repeats. French philosopher Gilles Deleuze’s concept of the Control Society is useful for exploring the contemporary digital networks and data systems to which a form of power over social life is attributed. [5] The concept holds that surveillance and data systems self-regulate the power dynamics of social life through the algorithmic profiling of identities, a process that maintains the societal patterns to which individual identities are subject. [6] Through this lens, personalized content algorithms should be understood as an expression of the commercial interests of datafication. Although they are distinct from algorithms used in civic or political life, such as sentencing algorithms, personalized content algorithms also have important implications for the regulation of social life.
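The feedback cycle described above can be illustrated with a minimal Python sketch. It does not represent the method of any actual platform: the function names, the topic weights, the fixed update step, and the small catalogue are all hypothetical, chosen only to show how seeding a profile from identity factors and updating it after every interaction or non-interaction can narrow what a user is shown.

```python
from collections import defaultdict


def initial_profile(identity_factors):
    """Seed topic weights from identity factors (an assumed, simplified mapping)."""
    profile = defaultdict(float)
    for topic in identity_factors:
        profile[topic] = 1.0
    return profile


def suggest(profile, catalogue, k=2):
    """Return the k items whose topic currently carries the highest weight."""
    return sorted(catalogue, key=lambda item: profile[item["topic"]], reverse=True)[:k]


def update(profile, item, clicked, step=0.5):
    """Interaction raises a topic's weight; lack of interaction lowers it."""
    profile[item["topic"]] += step if clicked else -step


def run_cycle(identity_factors, catalogue, user_clicks, rounds=5):
    """Repeat the suggest -> feedback -> update loop for a number of rounds."""
    profile = initial_profile(identity_factors)
    history = []
    for _ in range(rounds):
        shown = suggest(profile, catalogue)
        for item in shown:
            update(profile, item, user_clicks(item))
        history.append([item["topic"] for item in shown])
    return profile, history


# Hypothetical catalogue and user, for illustration only.
catalogue = [
    {"title": "A", "topic": "politics_left"},
    {"title": "B", "topic": "politics_right"},
    {"title": "C", "topic": "sports"},
    {"title": "D", "topic": "politics_left"},
]

# This user is profiled as interested in one topic and clicks only on that topic.
profile, history = run_cycle(
    identity_factors=["politics_left"],
    catalogue=catalogue,
    user_clicks=lambda item: item["topic"] == "politics_left",
)

for round_number, topics in enumerate(history, start=1):
    print(round_number, topics)
```

In this toy setting the user is shown the same topic in every round, because each click reinforces the weight that produced the suggestion in the first place. Real systems are far more complex, but the sketch captures the self-reinforcing structure that the echo chamber argument relies on.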

[1] Anthony Giddens, Modernity and Self-Identity: Self and Society in the Late Modern Age (Stanford, CA: Stanford University Press, 1997).

[2] Jonathan M. Cheek, “Identity Orientations and Self-Interpretation,” in Personality Psychology, ed. David M. Buss and Nancy Cantor (New York, NY: Springer US, 1989), 275–85, https://doi.org/10.1007/978-1-4684-0634-4_21.

[3] Bruno Latour, Reassembling the Social: An Introduction to Actor-Network Theory, 2005.

[4] Pablo Barberá et al., “Tweeting From Left to Right: Is Online Political Communication More Than an Echo Chamber?,” Psychological Science 26, no. 10 (October 2015): 1531–42, https://doi.org/10.1177/0956797615594620.

[5] Gilles Deleuze, “Postscript on the Societies of Control,” October 59 (1992): 3–7, http://www.jstor.org/stable/778828.

[6] Kurt Iveson and Sophia Maalsen, “Social Control in the Networked City: Datafied Dividuals, Disciplined Individuals and Powers of Assembly,” Environment and Planning D: Society and Space 37, no. 2 (April 2019): 331–49, https://doi.org/10.1177/0263775818812084.